Video games: posing in 3D

07/27/2022

- UdeMNouvelles

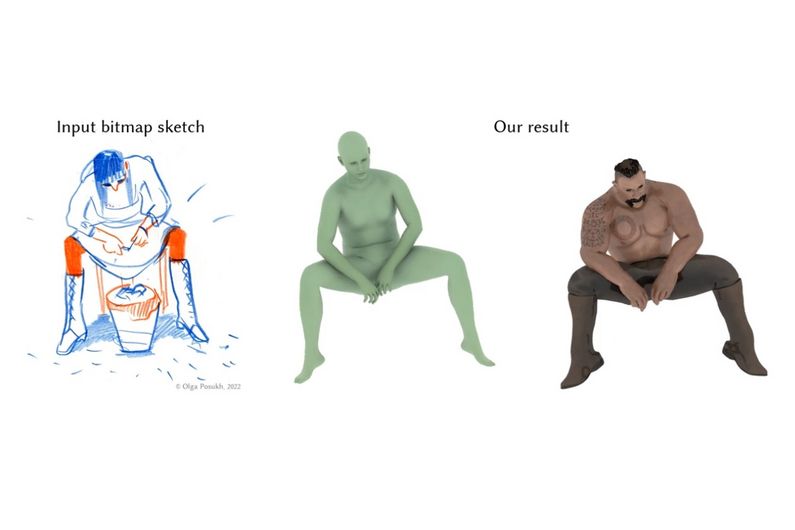

An UdeM computer scientist and his PhD student have developed a tool for animators to use bitmap sketches to control how a character stands and moves in three dimensions.

What’s the best way to get 3D characters in videogames to look real and expressive? Two computer scientists at Université de Montréal have come up with an answer: use simple bitmap sketches to make their poses more lifelike.

Assistant professor Mikhail Bessmeltsev and his PhD student Kirill Brodt have developed an animation tool that uses drawings to control how videogame characters stand and move in three dimensions.

The duo’s study was published on July 22 in ACM Transactions on Graphics. We asked them to tell us more.

What was the challenge you faced in coming up with this new tool?

Artists frequently capture character poses via raster sketches; the process is called storyboarding. They then use these drawings as a reference while posing a 3D character in their software. This is a time-consuming process that requires specialized 3D training and mental effort. What we’ve done is create the first software to automatically infer a 3D character pose from a single bitmap sketch, producing poses that are consistent with what the viewer expects to see.

What do you do to overcome these problems?

We address them by predicting three key elements of a drawing that are needed to disambiguate the drawn poses: 2D bone tangents (how is each body part rotated in the sketch?), self-contacts (is the left hand touching the head?), and bone foreshortening. We then use optimization to find a 3D pose that preserves all of those elements exactly. We validate our method by showing that the final 3D character’s pose is the pose an observer sees in a sketch.
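
To make the role of two of those cues concrete, here is a minimal sketch, not the authors' implementation, of how a 2D bone tangent and a foreshortening ratio together constrain a bone's 3D direction. It assumes a simple orthographic camera looking along the z-axis and unit-length bones; the function name `lift_bone` and the `toward_viewer` flag are hypothetical, introduced only for illustration.

```python
import math


def lift_bone(tangent_2d, foreshortening, toward_viewer=True):
    """Return a unit 3D bone direction under an assumed orthographic projection.

    tangent_2d: (x, y) direction of the bone as drawn in the sketch.
    foreshortening: projected length divided by true length, in [0, 1].
    toward_viewer: hypothetical flag that picks one of the two depth signs.
    """
    tx, ty = tangent_2d
    norm = math.hypot(tx, ty)
    if norm == 0:
        raise ValueError("tangent_2d must be nonzero")
    if not 0.0 <= foreshortening <= 1.0:
        raise ValueError("foreshortening must lie in [0, 1]")

    # Normalize the drawn direction, then scale it by the foreshortening ratio:
    # this is the part of the unit bone that lies in the image plane.
    tx, ty = tx / norm, ty / norm
    x, y = foreshortening * tx, foreshortening * ty

    # The remaining length must lie along the viewing axis. Its sign is
    # ambiguous from these two cues alone.
    depth = math.sqrt(1.0 - foreshortening ** 2)
    z = depth if toward_viewer else -depth
    return (x, y, z)


direction = lift_bone((3.0, 4.0), 0.6)
```

Even with both cues, two mirror-image solutions remain (the bone can point toward or away from the viewer), which hints at why an additional cue such as self-contacts is useful when resolving a whole pose.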

So you make the poses seem more expressive, and more “real,” that way?

That’s right. Sketches are sometimes simply an easier way to capture an expressive pose than 3D software, especially for classically trained artists. We try to keep that expressivity and realism in our algorithm. We do that by balancing the visual cues from artistic literature and by using visual perception research to compensate for distorted proportions in the characters that are generated. We validate our method via numerical evaluations, user studies, and comparisons to manually posed characters and previous work, and we demonstrate a variety of results.

How could this tool be useful to animators?

We hope that it can become a part of the animation pipeline and become a big time-saver. With a single natural bitmap sketch of a character, our algorithm allows the animator to automatically, with no additional input, apply the drawn 3D pose to a custom ‘rigged’ and ‘skinned’ 3D character. That essentially means that animators can now create a first rough draft of the animation right after the storyboarding stage, i.e., when they have just sketched the keyframes.

What videogames do you have in mind as targets, and do you think consumers will notice the improvement?

We hope it will be used in both the animation and gaming industries, but if we talk about games specifically, pretty much any game with animated characters is a target. We think that our system can be used, if desired, together with the physics-based animation or motion capture technologies that are popular in action games. We would like to see our algorithm help animators, allowing them to devote more time to the creative process.

About this study

“Sketch2Pose: estimating a 3D character pose from a bitmap sketch,” by Kirill Brodt and Mikhail Bessmeltsev, was published on July 22, 2022, in ACM Transactions on Graphics.

Media contact

Jeff Heinrich

Université de Montréal

Tel: 514 343-7593