Tasking the 'bots

- UdeMNouvelles

03/28/2023

- Jeff Heinrich

In a third-floor laboratory at UdeM's Department of Computer Science, Glen Berseth and his students are busy putting two robotic arms, a quadruped and a motorized toy truck through their paces.

In a computer-science laboratory at Université de Montréal, Glen Berseth and his graduate students are putting three types of robots to work.

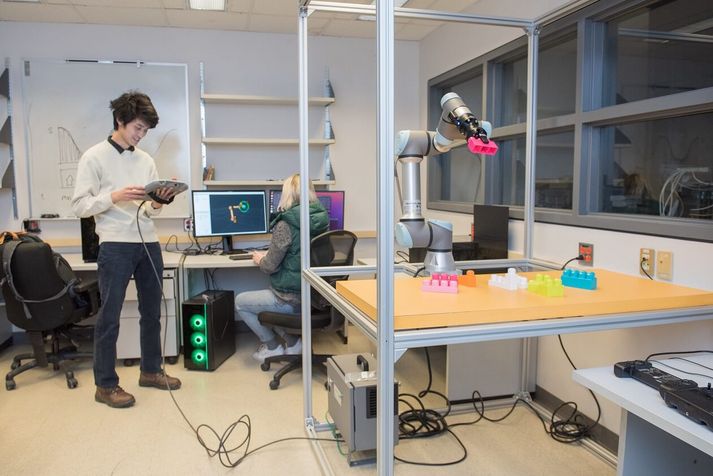

A group of robotic arms is busy assembling structures with Lego blocks and learning to clean and fold laundry inside an open 'cage' of aluminum bars.

A quadruped moves around on the floor, outfitted with an arm of its own to push objects around the room, like a dog playfully nudging things with its paw.

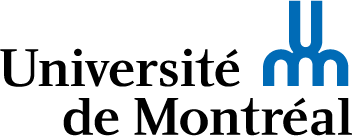

And a four-wheeled vehicle that looks like an oversized yellow Tonka truck gets around with the help of cameras on its front and back and a tower of sensors on its roof.

Using artificial intelligence, all three types are being trained to recognize the tasks they’re asked to perform, so that one day they can be put to practical use in the household – folding laundry, say, or preparing breakfast.

Choose carefully, then design

"The first step was buying the robotics hardware and choosing the parts carefully; now we're designing the tasks that the robots are going to learn," explained Berseth, an assistant professor, member of Mila and co-director of his department's Robotics and Embodied AI Lab. "We've got the robots, but how do we tell them they did a good job, that they're doing the things we want them to do?"

A robotic arm, for example, "has cameras to guide it but gradually we'll use AI to train it to recognize what it needs to do. First, it'll see pictures of folded laundry, then it will try to fold them, and only when it actually does a good job will it get a digital 'treat' from us – a '1' vs a '0', on the computer – to reinforce that good behaviour, making it more likely to succeed again the next time. Then we repeat that with all sorts of laundry."
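The '1' vs '0' reward Berseth describes can be illustrated with a toy sketch. This is not the lab's code: the three hypothetical folding strategies, their made-up success rates, and the function name `train_with_binary_reward` are assumptions chosen only to show how a binary 'treat' can steer a learner toward the behaviour that succeeds most often.

```python
import random

def train_with_binary_reward(success_prob, trials=2000, epsilon=0.1, seed=0):
    """Estimate how often each strategy succeeds, using only 1/0 rewards.

    success_prob holds hypothetical success rates, hidden from the learner.
    """
    rng = random.Random(seed)
    n = len(success_prob)
    value = [0.0] * n   # running estimate of each strategy's success rate
    count = [0] * n     # how many times each strategy has been tried
    for _ in range(trials):
        if rng.random() < epsilon:
            # Occasionally try a random strategy (exploration).
            action = rng.randrange(n)
        else:
            # Otherwise use the strategy that currently looks best.
            action = max(range(n), key=lambda a: value[a])
        # The digital 'treat': 1 for a good fold, 0 otherwise.
        reward = 1 if rng.random() < success_prob[action] else 0
        count[action] += 1
        # Incremental average of the rewards seen for this strategy.
        value[action] += (reward - value[action]) / count[action]
    return value

# Three made-up folding strategies with different hidden success rates.
estimates = train_with_binary_reward([0.1, 0.3, 0.9])
best = max(range(len(estimates)), key=lambda a: estimates[a])
print("preferred strategy:", best)
```

After enough trials the learner favours the strategy that earns the treat most often, which is the sense in which the reward makes success "more likely" the next time. A real robot learns from camera images and continuous motions rather than three fixed choices.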

The other arm, by contrast, is being trained to move objects around on a table whatever colour the table happens to be; "in other words, it's being trained to ignore surface colour," said Berseth. And the quadruped is being trained to navigate "in places like Montreal where apartments aren't always flat ground, where there are steps, and where, outside in the streets in the spring, there are lots of potholes."

Berseth and his students assembled the robots to be versatile, going beyond the singular tasks they were designed to do, such as moving products to a rack in a warehouse. They want them to 'see' the space they're in and act accordingly.

"Robots now don't really use camera input," Berseth pointed out. "On an automobile assembly line, for instance, they're essentially blind and just repeat exactly what they're told to do, with their eyes closed and without error. But that's not how our world really works. There's much more we'd like the robots to be able to do, to really use the information they're collecting. We want the robot to react the same way people do."

12 grad students at work

In his department's labs on the third floor of the André Aisenstadt building, Berseth has six graduate students working on the robots (three for the arms, three for the vehicle) and another six on the AI side of things, on reinforcement learning. On the day we visited, robotics software engineer Kirsty Ellis was busy at the lab's desktop computer, controlling one of the robotic arms with planning software, helping it move plastic building blocks to various 'goal positions' in the cage, while first-year PhD student Albert Zhan stood by with a handheld power console, monitoring the arm's progress.

The researchers are also working on a version of this installation that students can take home and work with themselves in their day-to-day lives. Zhan, for instance, hopes to do some cooking with it: "Nothing complicated – maybe something like scrambling eggs," he said, "or taking portions that have already been measured out and getting it to just throw everything into the pan to cook."

In a neighbouring lab, meanwhile, master's student Miguel Saavebra and postdoc Steven Parkison are busy running the robotic truck through some tests, trying to see how it can best navigate around the room.

"We feed it an image of something and, using only that, it navigates towards it," explained Saavebra, prepping for a presentation of his thesis project in Detroit, at the annual IROS conference on robotic intelligence. "On one computer screen you see a live 3D representation of the room captured by the sensors, and on the other you see the actual 'physical-world' views through the cameras. By having both, we can evaluate how the machine-learning part of the test is working and see if the robot is doing what we want it to do."

Offline memory can enrich learning

The robots at UdeM "keep a good chunk of offline memory," Berseth noted. "They have an image of what they did before and what it looks like, so they'll do something similar to what they've got in their database. And these days, we're using machine learning to take the next step: push all that information into a model where the machines learn, instead of just running through a sequence of pictures. They don't need to search in their database; that's already built into the model."
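The contrast Berseth draws, between searching a database of past experience and building that experience into a model, can be sketched in a toy setting. Everything here is an assumption for illustration: observations are single numbers rather than images, the stored 'actions' follow a made-up straight-line rule, and the helper names `nearest_neighbour_action` and `fit_linear_model` are hypothetical.

```python
def nearest_neighbour_action(memory, observation):
    """Database approach: search stored experience for the closest match."""
    nearest = min(memory, key=lambda pair: abs(pair[0] - observation))
    return nearest[1]

def fit_linear_model(memory):
    """Model approach: compress the stored experience into two numbers."""
    n = len(memory)
    mean_x = sum(x for x, _ in memory) / n
    mean_y = sum(y for _, y in memory) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in memory)
    var = sum((x - mean_x) ** 2 for x, _ in memory)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Made-up experience: (observation, action) pairs following action = 2x + 1.
memory = [(float(x), 2.0 * x + 1.0) for x in range(5)]

# The lookup can only repeat something it has already stored.
lookup_action = nearest_neighbour_action(memory, 10.0)

# The fitted model no longer needs the database and can extrapolate.
slope, intercept = fit_linear_model(memory)
model_action = slope * 10.0 + intercept

print(lookup_action, model_action)
```

For an observation far from anything stored, the lookup returns the action of the closest old example, while the fitted model applies the pattern it extracted from the data. A straight-line fit stands in here for the far larger learned models used on real robots.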

Right out of the box when it arrived in the lab, the four-wheeler could navigate around with the sensors it already had, "but we want to get it to also be able to navigate via the images we feed it, which would be more like the real world – and with AI, it should be able to do that," said Saavebra. Added Berseth: "We want it to be a little more user-friendly. For now, text is starting to become useful, even if you have to type something, but audio is trickier: there's a lot of pauses, and people have different pitch."

"Ultimately," concluded Saavebra with a laugh, "I'd love to give the robot a pair of arms and legs and get it to do the dishes for me, things like that. The boring tasks, in other words."